AI Chip Race Heats Up as Startups Challenge Nvidia’s Dominance

A new battleground is emerging in artificial intelligence: specialized computer chips for AI inference. Nvidia has long dominated the AI chip market with its powerful GPUs, but a growing number of startups and traditional chipmakers are now vying for a slice of the lucrative inference business.

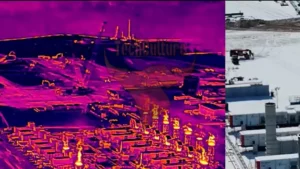

AI inference, the process of running trained AI models to produce outputs such as predictions or generated content, is becoming increasingly crucial as businesses seek to implement AI solutions at scale. While GPUs have been instrumental in AI training, their limitations in inference tasks have opened the door for innovative chip designs tailored specifically for this purpose.

Companies like Cerebras, Groq, and d-Matrix are at the forefront of this new wave of AI inference chip development. These startups are joined by industry giants AMD and Intel, all aiming to create more efficient and cost-effective solutions for AI deployment.

One such company, d-Matrix, has been making waves with its Corsair product. Founded by industry veterans, the startup faced numerous challenges in developing its innovative chip design. The company’s journey from concept to manufacturing highlights the complex process of bringing new AI hardware to market.

The target market for these specialized AI inference chips extends beyond tech giants to Fortune 500 companies across various sectors. Potential applications range from AI-powered video generation to more efficient data processing in enterprise environments. However, cost and integration considerations remain key factors for potential adopters.

The development of AI inference chips carries significant implications for both business costs and the environment. These specialized chips have the potential to dramatically reduce the energy consumed by AI applications, addressing growing concerns about the environmental impact of AI technologies.

As the AI inference market continues to grow, industry experts predict it could rival or even surpass the AI training market as a business opportunity. This shift could have far-reaching consequences for the future of AI development and sustainability.

The race to develop efficient AI inference chips represents a critical juncture in the evolution of artificial intelligence technology. As competition intensifies, the resulting innovations are likely to shape the landscape of AI applications for years to come, potentially democratizing access to advanced AI capabilities beyond the realm of tech giants.